Introduction

In a 1937 article, the Nobel Prize-Winning author Ronald Coase wrote the "Nature of the Firm". In this article, he laid down the conditions for how people organize to produce goods and services. For Coase, the two main ways of producing were with individuals in a market ruled by prices, and employees grouped in a firm. Markets are an efficient means of creating wealth but Coase argues the best way to serve the requirement of customers was through the firm. Indeed, according to Coase, the firm structure allows reducing the coordination cost mandatory for any collaborative project. But this theory was only valid until the digital age!

A new form of collaborative work has emerged with the digital age where individuals contribute without the border of a firm. These collaborative productions can be open-source software like Linux, Node.js (a javascript webserver), culture, or information like Wikipedia. Following Coase article and his 2 models of production, Yochai Benkler wrote in 2002 an essay called "Coase’s Penguin, Or, Linux and ‘The Nature of the Firm.’" to add a third model, now possible with online collaboration, that he called "commons-based peer production (CBPP)". This new model of production relies on decentralized information gathering, self-organization, and most of the time, no financial incentive. This is an incredible age, where thousands of people can participate in projects beyond the limit of their firm. After the first wave, driven by individuals, companies now embrace this philosophy as it brings a sense of belonging to employees and serves the companies as they benefit from contributions from outside their labor force.

Open-source produces code libraries to be used as building blocks in larger projects but it also produces standalone applications. In the latter, modularisation and the cloud are transforming how we develop web applications and as a result, will change how people collaborate on open source projects.

Web applications are now distributed

When I started coding fifteen years ago, a web application was a big program that handled everything. User accounts, email sendings, file storage, etc. were all coded in a single program.

Two limitations emerged with this approach:

- First, when several people work on the same program, the code base grows quickly, and the complexity increases. Developer changes can conflicts when they edit the same code and it brings overhead in code maintenance. Furthermore, at some point, it becomes impossible for a single mind to keep the whole program modeled in his head.

- And it became obvious developers were reinventing the wheel and spending too much time on basic features.

The solution was to split the application into several services. Each service is responsible for a subset of features and can be maintained without interfering with other service's code. Services can be developed by the application developers or can be maintained (and sometimes host) by other providers.

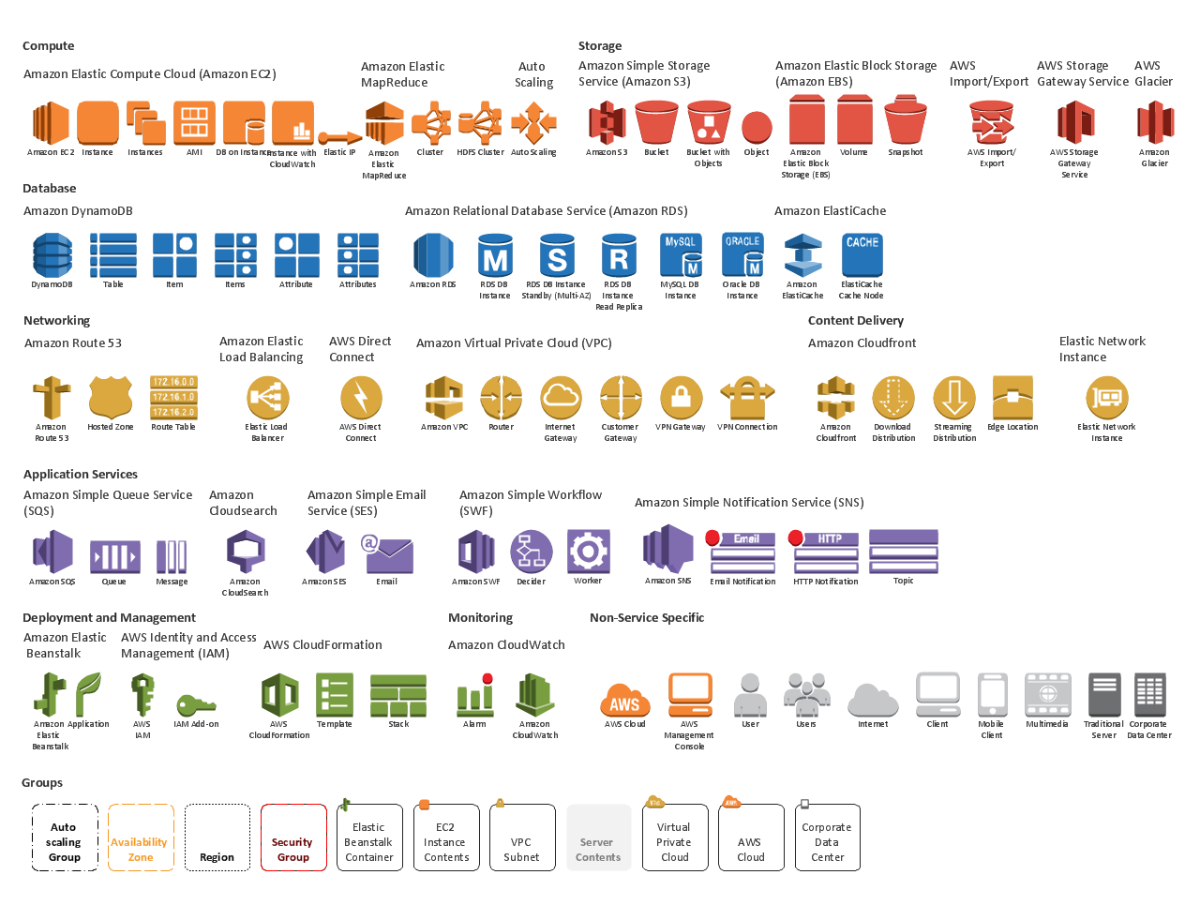

Amazon and its Amazon Web Services (AWS) understood this shift before anyone. AWS started with infrastructure services but now provides high-level services to deal with authentication, emails, push notifications, analytics, machine learning, etc. Amazon teams develop the services, update them when security fixes are needed and take care of the availability.

With this transformation, the infrastructure is now an integral part of the whole application. It is not enough to have the software code idle in Github to be able to run it, the intelligence from the cloud infrastructure is required. How do the services communicate with each other? How do you share settings between services? How errors are handled when a service is down? How do you deploy a new service in this mesh?

This transformation has been going on for some years in closed source applications. For open-source applications, it is harder to define this infrastructure intelligence in the code repository. Today, serverless technologies can change how open-source distributed applications are built and move code collaboration to the cloud.

Serverless technologies will change how we consume open source software...

The open-source software distribution model currently consists of choosing between starting from the source code and self-deploying it, or using a SaaS model some companies have built on top of open-source software. In the latter, the software is hosted on instances paid by the editor and the editor generates revenues with licenses. The editor's role is to make a profit between what the instances cost and the revenues the licenses generate.

For example, the WordPress software code is open source and can be self-deployed or used directly using the SaaS plan provided by Wordpress.com.

Now, imagine what the serverless infrastructure brings. Take an application built around serverless technologies. The application is hosted on a cloud platform, ready to run. Obviously, it is made of compute serverless services to run code, but also serverless databases, serverless cache instances, serverless queue services, etc.

Serverless computing is a cloud computing execution model in which the cloud provider allocates machine resources on demand, taking care of the servers on behalf of their customers. Check the Serverless definition on Wikipedia.

When a customer runs the applications, the cloud platform allocates resources on-demand to launch and run the needed services. At the end of the execution, it is possible to count each service invocation and bill the customer.

In this model, the starting point is not a Github repository but a ready to run application hosted in a cloud platform App Store.

... and bring collaborative cloud platforms

Serverless technology is still new, yet we can already witness the change.

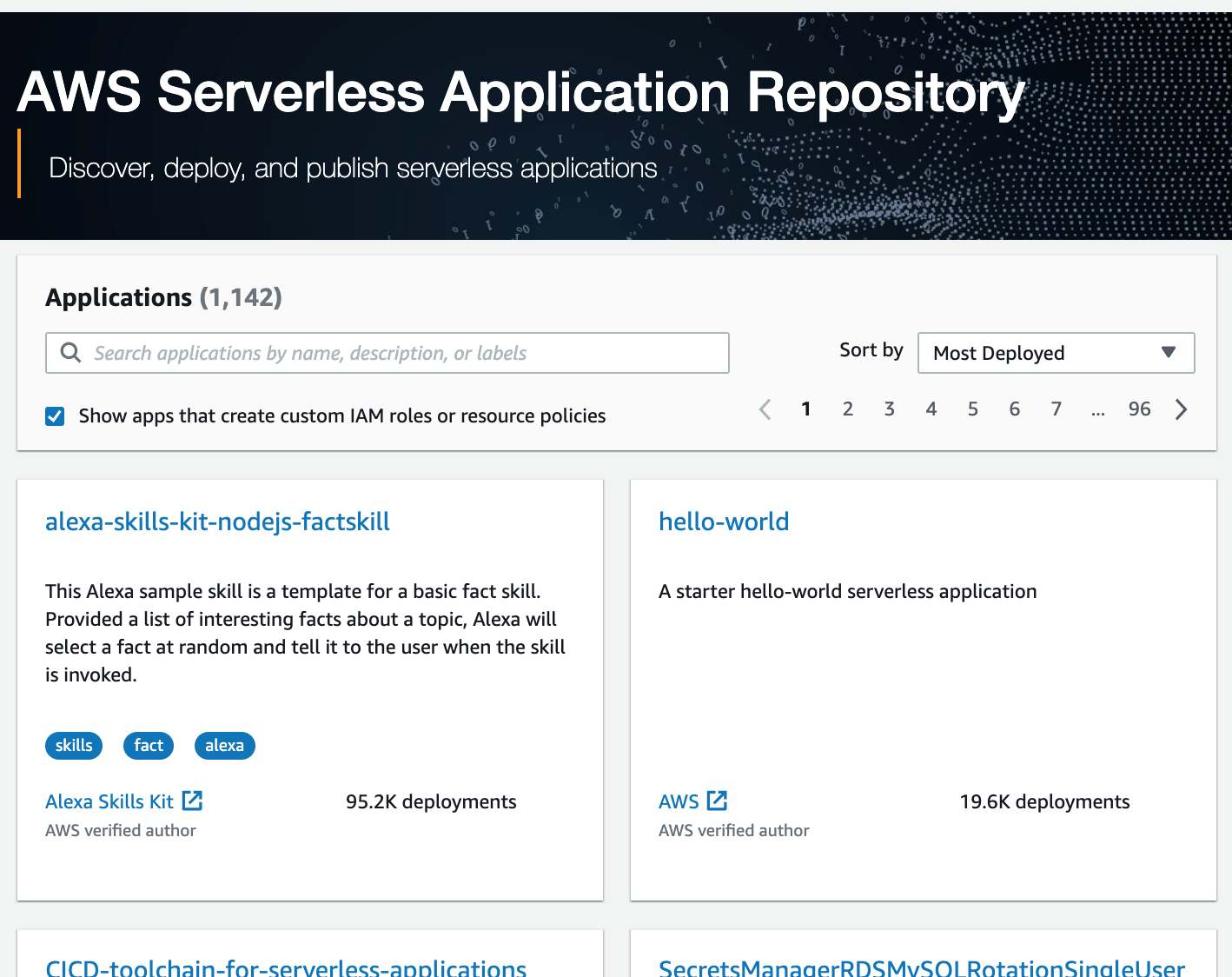

AWS Serverless Application Repository

Amazon Web Services platform's primary role is to distribute AWS services but they release in 2018 their Serverless Application Reposity. An example is this service to handle image manipulation. AWS has a leading role in serverless and it would make sense for the other cloud providers to follow. Google currently has a marketplace to run third-party solutions but the marketplace doesn't provide software with serverless pricing.

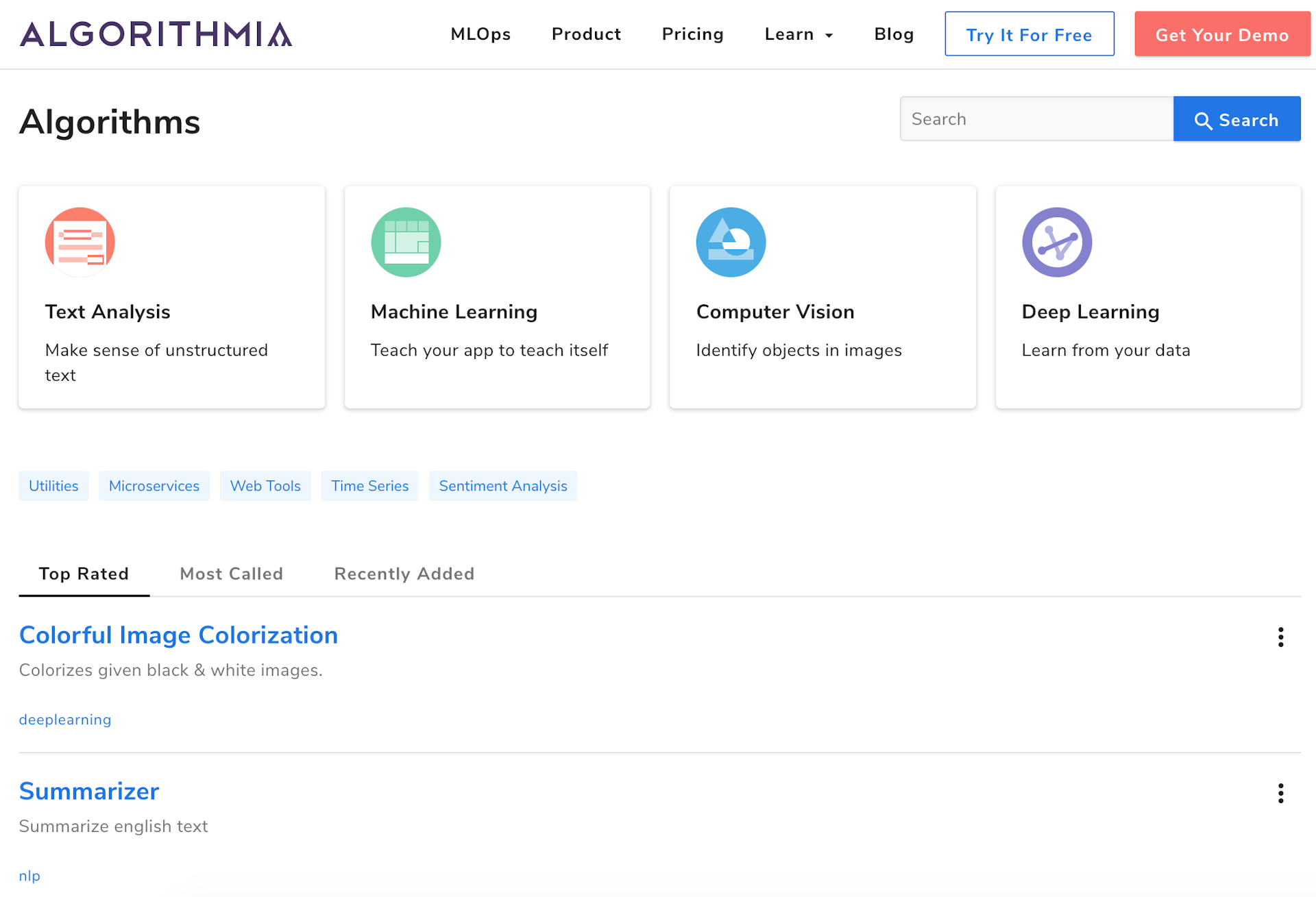

Algorithmia

Another example is the Algorithmia services marketplace. Alorithmia is a serverless cloud platform to host machine learning models. Anyone can publish its trained models on the marketplace for others to use. A published model exposes an API. Hosting and scaling of the underlying service are managed by Algorithmia. Publishers can also charge a commission fee for the execution of their services.

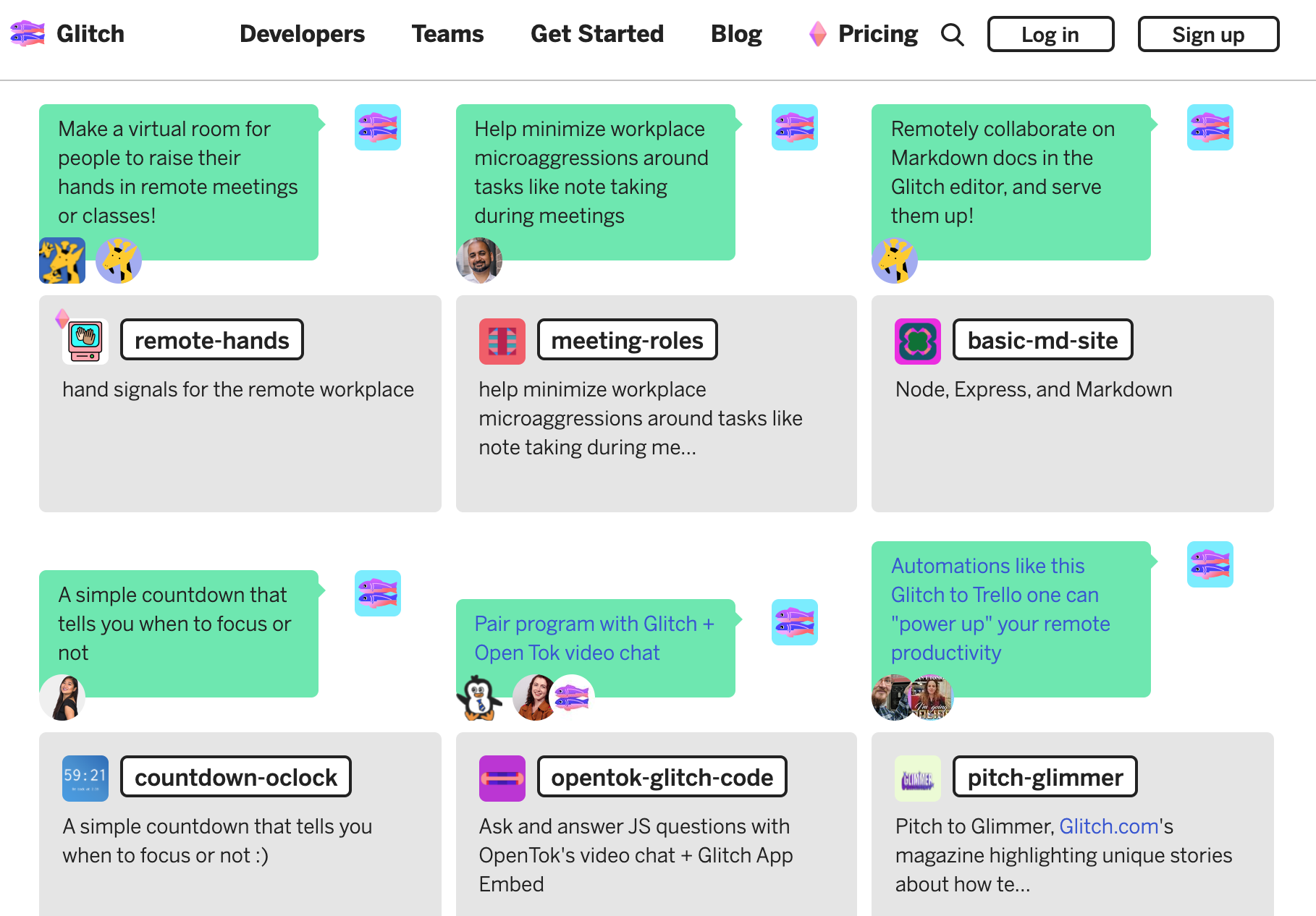

Glitch

I love Glitch. I love the story and the company culture. Glitch is a collaborative coding environment where the applications are always "runnable". Users can create applications from scratch or duplicate open source applications from other users. Once someone accesses the application, Glitch runs it on a serverless platform and the instance is stopped after 5 minutes of inactivity. Glitch blends both code hosting and application hosting in a collaborative cloud platform.

Datablist

Datablist is a database platform to store any kind of items and to trigger actions on them (manually or using automation). For example, you can trigger enrichment actions on people items, web scrapping actions on URLs, OCR (optical character recognition) actions on PDF, etc.

Under the hood, every action on Datablist is a serverless service called whenever a user runs the action. All the actions available on Datablist are listed in an "Actions Directory".

Users can use actions from this Actions Directory or code their own actions that will be hosted and run on Datablist.

An important concept on Datablist is to have common data types shared by all users. It allows having generic actions on data types and properties: People, PDF, URL, etc. Because they are generic, an action created by a user can be published on the "Actions Directory" and be available to everyone.

By using tens, hundreds, or thousands of actions on your data, and by automating when and how each action is triggered, you end up building applications. An application being a mesh of actions. The goal is to have applications that suit your needs while you benefit from community actions, so you don't have to maintain each service.

If you share our vision, subscribe to our newsletter below and join our beta program.