Zero code, lots of AI. This is how we made scraping e-commerce sites easier than ever.

You don't even need to know how to prompt effectively: we provide a ready-made prompt and step-by-step instructions so you can extract the products you need from any e-commerce store.

Enough with the words, let's scrape products!

Step 1 – Understanding E-Commerce Site Scraping

There are two ways to scrape products from e-commerce sites:

You can either:

-

Scrape "Collection/Category" pages to extract selected products from an e-commerce site ⬅️ This is the method we'll apply today

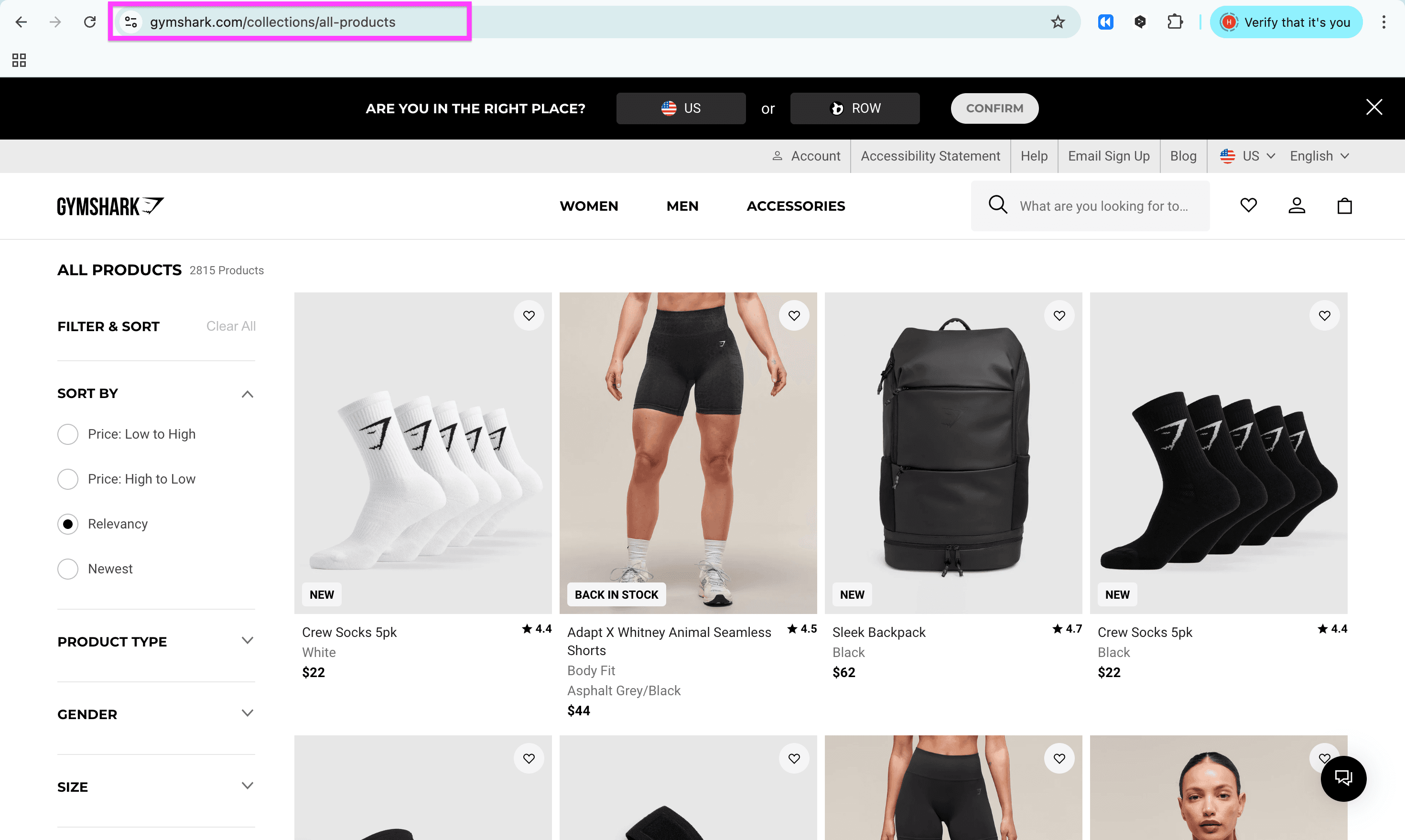

This is the category page I’ll use to scrape all products from -

Scrape the "All Products" pages to extract all products from an e-commerce store

“All products” pages offer less control over which products are extracted.

The configuration for the AI agent works the same way in both cases; only the starting URL is different.

Step 2 – Preparing the Scraping of E-commerce Sites

Preparation is half the battle, right? Here's what we will do to prepare the scraping of the e-commerce sites:

- Get the links of the e-commerce sites you want to scrape

- Sign up for Datablist

- Create a collection in Datablist

Let's begin!

To scrape products from e-commerce sites without code, you need a tool that lets you do this in plain English. One of these tools is the AI Research Agent in Datablist.

Once you've signed up for Datablist, you'll need to set up your automation. I'll explain how!

Create a new collection by clicking on the plus, or just use the shortcut "N"

This is what you’ll see when creating a new collection. I’ve already renamed my collection (I gave it a nice emoji too)

That’s it with preparation. Now comes the action.

Step 3 – Getting Started with the Scraping of E-commerce Sites

To get started with scraping products from e-commerce sites, you need to do 3 things:

- Choose the "AI Agent - Site Scraper" as a source

- Paste the prompt that explains to the AI Agent what your goal is

- Create an output property for each piece of product information you want to scrape

Now that we have outlined everything we are going to do — let's start scraping!

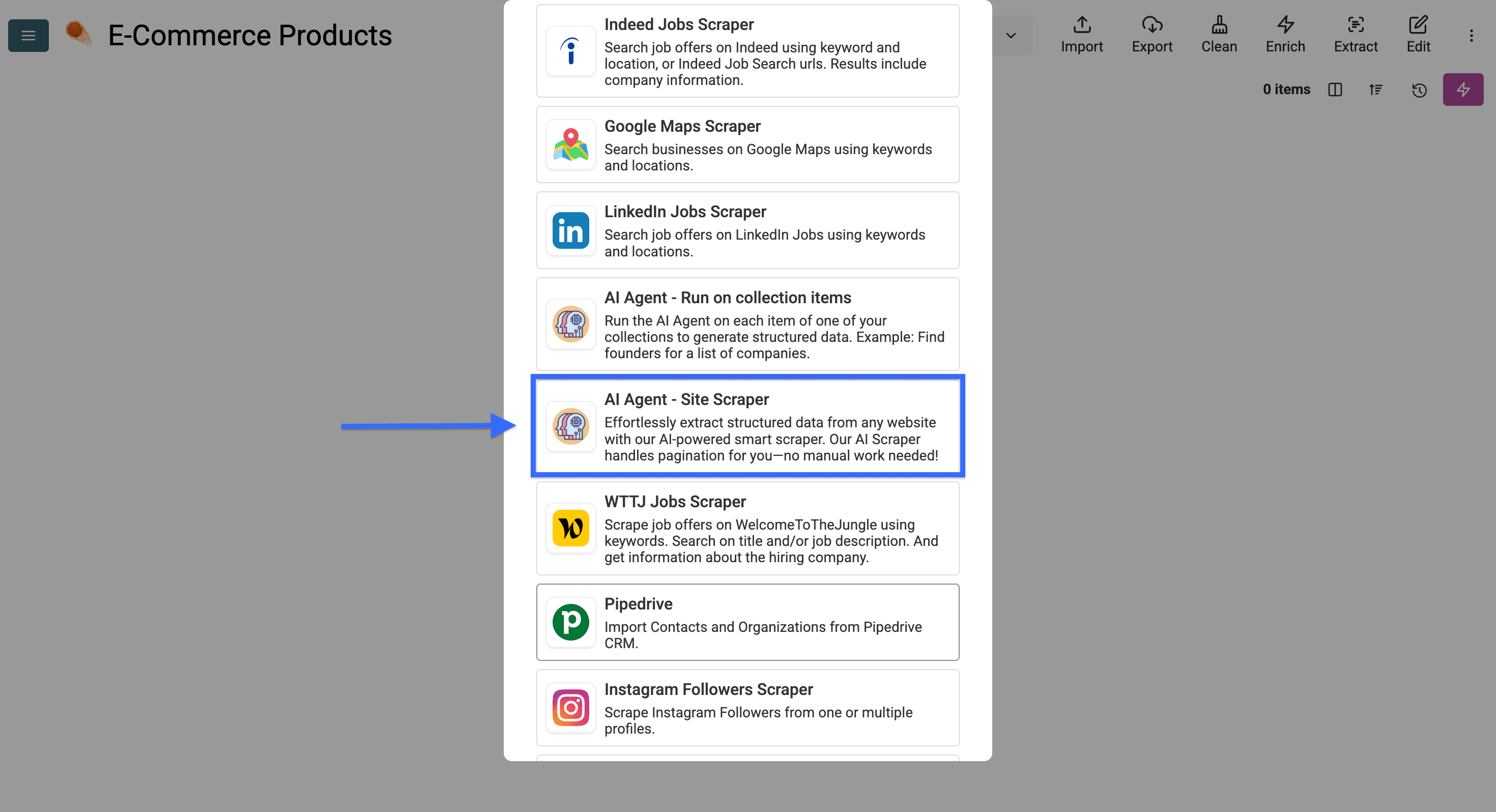

Click on "See all sources" to start configuring the AI agent for scraping

Now select the “AI Agent - Site Scraper”

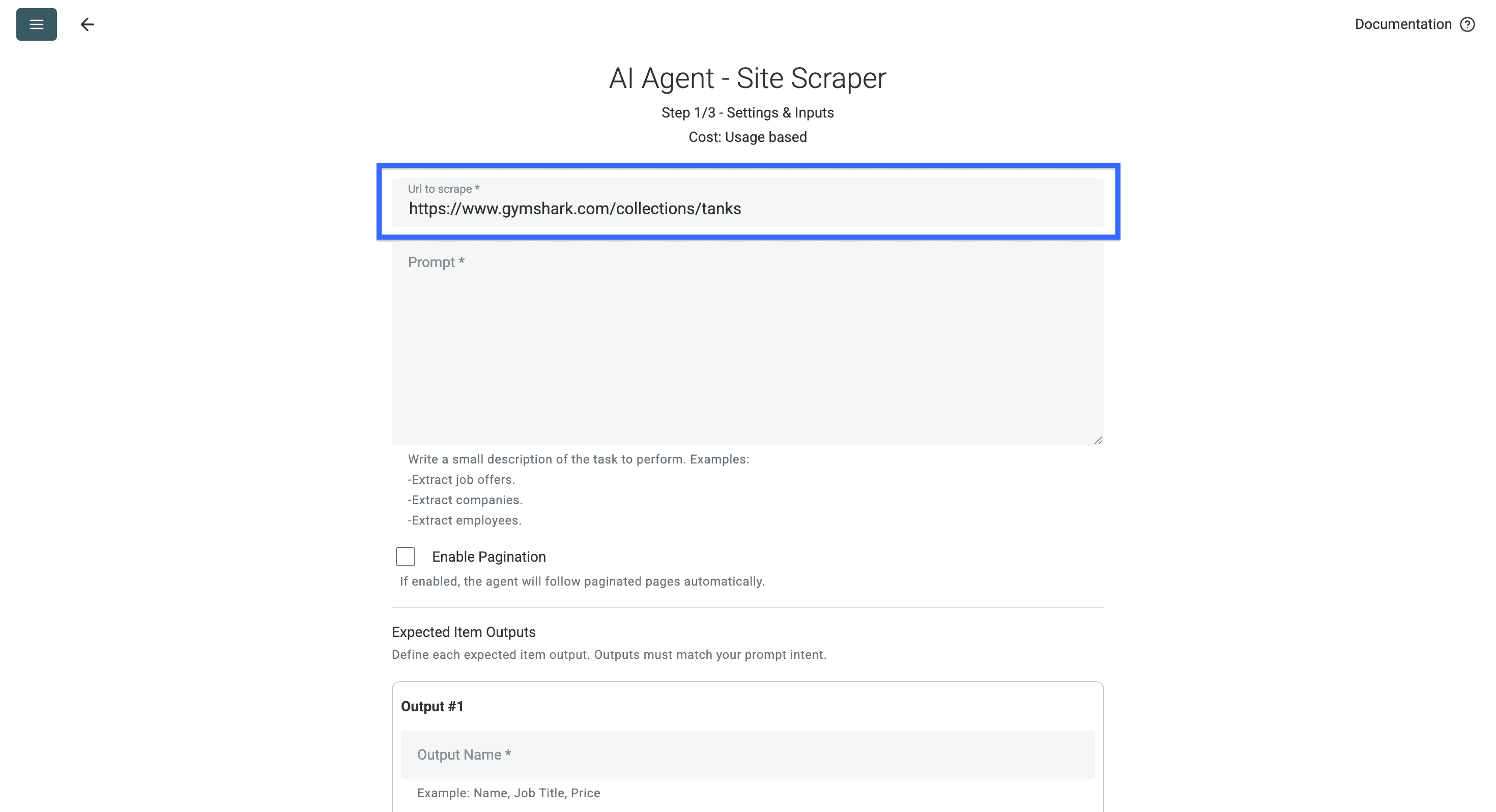

Paste the URL of the site you want to scrape products from in the first field.

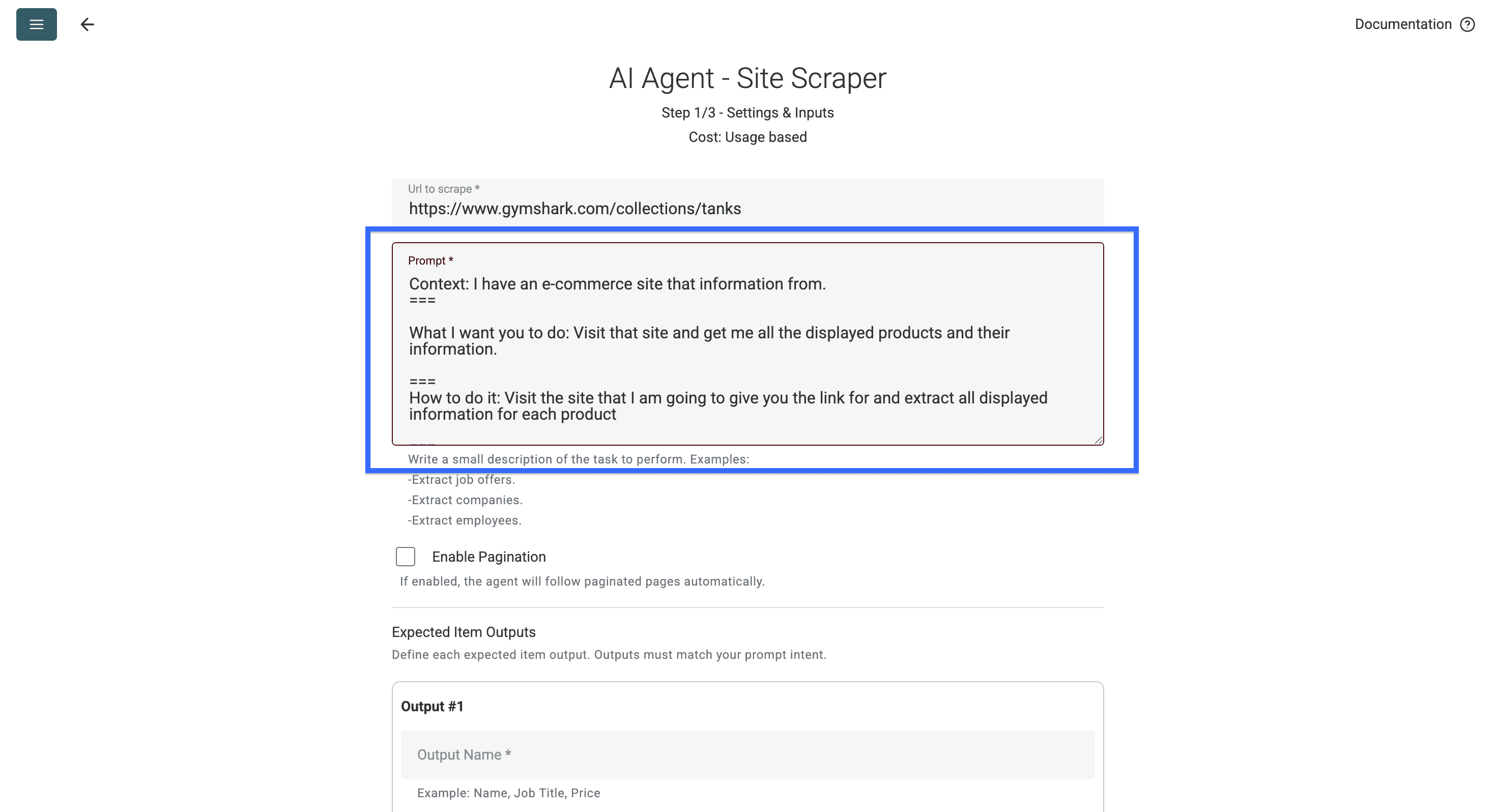

Then type in a prompt explaining to the AI agent what information you want to scrape from that e-commerce site (you can also use my prompt below)

Context: I have an e-commerce site with a listing of products.

===What I want you to do: Visit that site and get me all the displayed products and their information.

===How to do it: Visit the site that I am going to give you the link for and extract all displayed information for each product.

===Important mention about the task: Don't extract any information that isn't linked to a product, e.g. calls to action

===Here's a description of what we are looking for:

- Name of the Product

- Link to the product page

- Original Price of the product in the displayed currency

- Product category: (examples: Nutritionally Complete Instant Meals, Tank tops, Socken)

- Product specification 1: (examples: Compression fit, 40g protein, Premium Füßlinge)

- Product specification 2: (examples: Color, pieces, servings)

- Special Tags: (examples: New, limited edition, last chance etc. Return "None" if there are none)

- Absolute link to the product picture

- Discount in % (if available. Return "None" if there's no discount)

Don't return anything that doesn't fall into these data types, and return only one piece of information for each type

===Important mention about the data: Not all pages are structured in the same way, but the products are all labeled well enough that you should be able to recognize the distinctions between the data points.

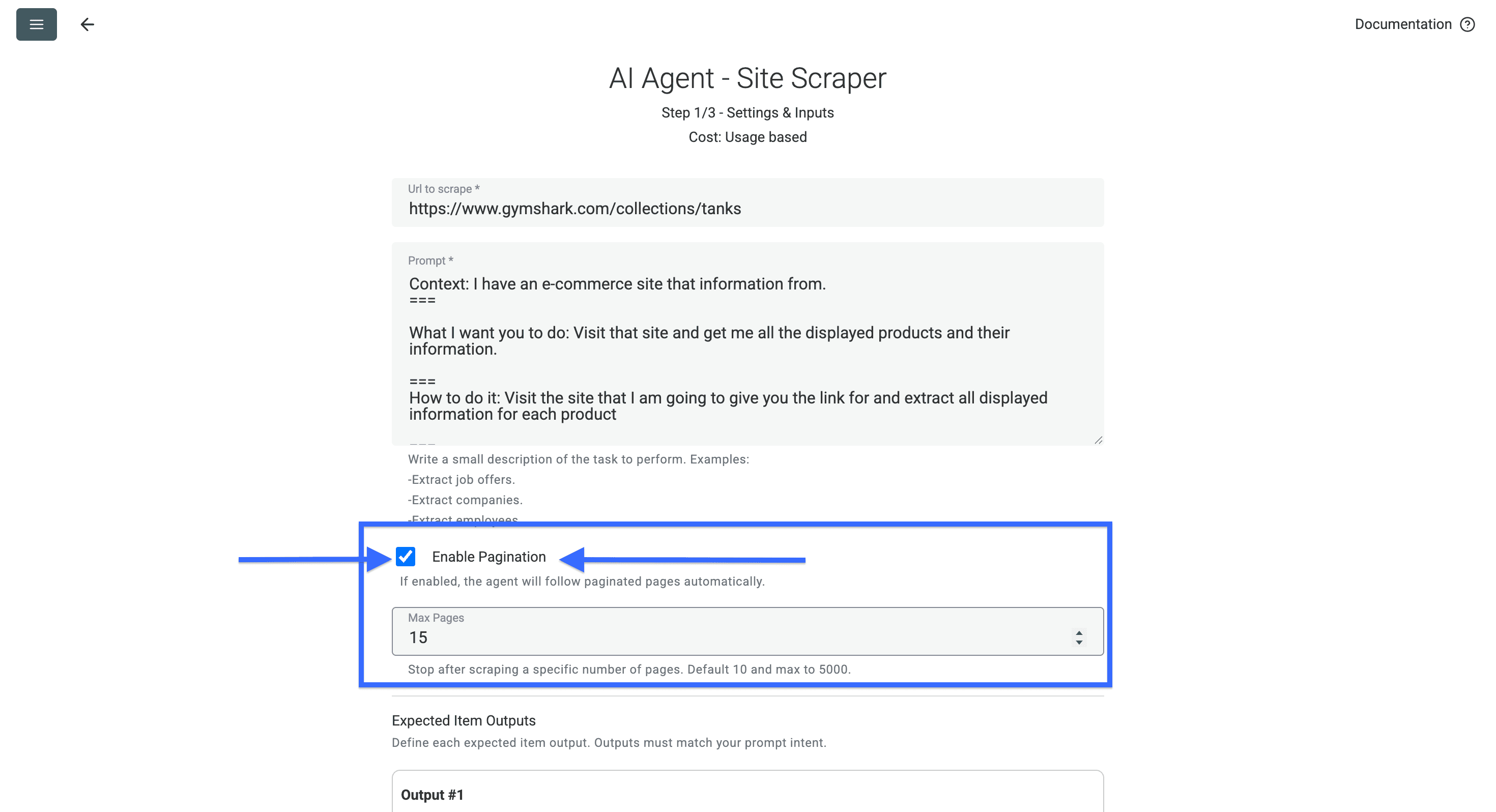

Now check the Enable Pagination box so the AI scraper can automatically go to the next page after it has scraped the first one.

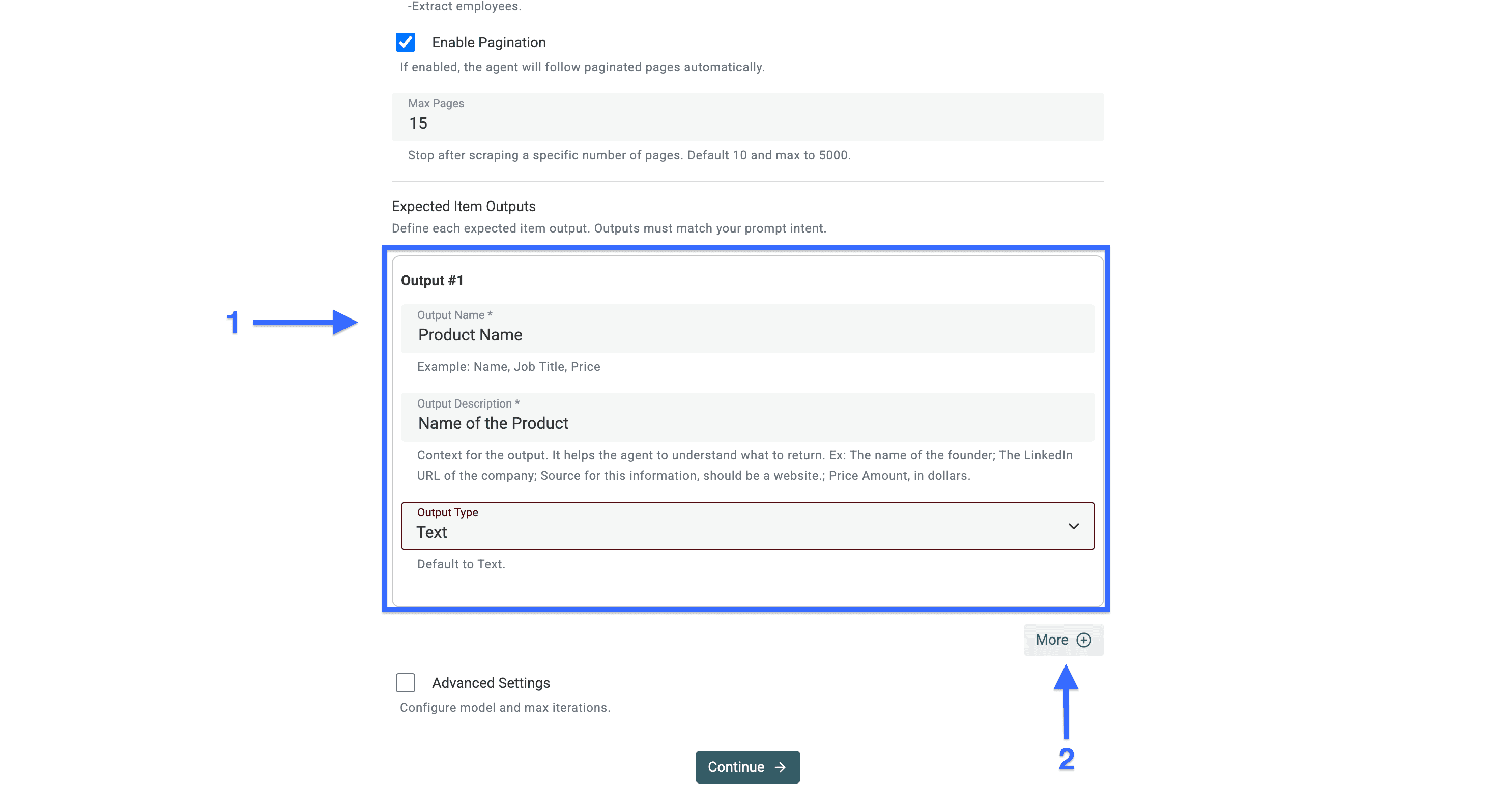
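To make the idea of pagination concrete, here is a minimal Python sketch of what "go to the next page after scraping the current one" means. It is only an illustration, not how Datablist implements the feature: the `PAGES` dictionary, its URLs, and the `scrape_all_pages` function are hypothetical stand-ins for a real paginated category page.

```python
from typing import Optional

# Hypothetical in-memory stand-in for a paginated category page.
# Each entry lists the products on that page and the link to the next page.
PAGES = {
    "/collections/tanks?page=1": {
        "products": ["Tank A", "Tank B"],
        "next": "/collections/tanks?page=2",
    },
    "/collections/tanks?page=2": {
        "products": ["Tank C"],
        "next": None,  # last page: nothing left to follow
    },
}


def scrape_all_pages(start_url: str, max_pages: int = 10) -> list[str]:
    """Collect products page by page until there is no next page."""
    products: list[str] = []
    url: Optional[str] = start_url
    visited = 0
    # max_pages is a safety cap so the loop always terminates.
    while url is not None and visited < max_pages:
        page = PAGES[url]
        products.extend(page["products"])
        url = page["next"]
        visited += 1
    return products


all_products = scrape_all_pages("/collections/tanks?page=1")
```

Without pagination, the equivalent of `max_pages=1` applies: only the products on the first page are collected.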

Now you have to create an output field/column for each type of product information you want to scrape.

Click on "More" to create more output fields, and continue this process until you have an output field for each information type

If you need the product specifications stored separately, create a field for each product specification. Here's an example:

💡 Do This for More Accurate Scraping Results

Give the AI Agent explicit examples of the product specifications you want to have. Here’s an example based on GymShark’s tank tops (the picture above)

Product specification 1: Slim Fit

Product specification 2: Black
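If it helps to picture the result, the output fields can be thought of as the columns of one record per product. The Python sketch below mirrors the nine data points from the prompt. The class name, attribute names, and every example value except "Slim Fit" and "Black" are hypothetical and chosen only for illustration; in Datablist you create these fields in the interface, not in code.

```python
from dataclasses import dataclass, fields


@dataclass
class ProductRow:
    """One scraped product; each attribute corresponds to one output field."""
    name: str
    product_link: str
    original_price: str           # kept as text, in the displayed currency
    category: str
    specification_1: str          # e.g. "Slim Fit"
    specification_2: str          # e.g. "Black"
    special_tags: str = "None"    # the prompt asks for "None" when absent
    picture_link: str = ""
    discount_percent: str = "None"


# Hypothetical example row; only the two specifications come from the article.
example = ProductRow(
    name="Example Tank Top",
    product_link="https://shop.example.com/products/example-tank-top",
    original_price="25.00 USD",
    category="Tank tops",
    specification_1="Slim Fit",
    specification_2="Black",
)

# One output field per attribute.
output_field_names = [f.name for f in fields(ProductRow)]
```

Keeping the two specifications as separate attributes is the code equivalent of creating one output field per specification.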

Once you have created all the output fields for the information you want to scrape, click on the checkbox for "Advanced Settings"

Now you can:

- Specify the model you want to use for scraping ⬅️ We recommend using GPT-4o mini for the best "performance to price" ratio

- Select the maximum number of iterations you want the AI agent to make

- Enable the “Render HTML” option so the AI Agent can scrape JavaScript-rendered shops. 🚨 This is mission-critical for some e-commerce shops. You can try without it first and restart the scraping with this setting enabled if the first run didn't yield results

Once you've enabled it, click on “Continue”.

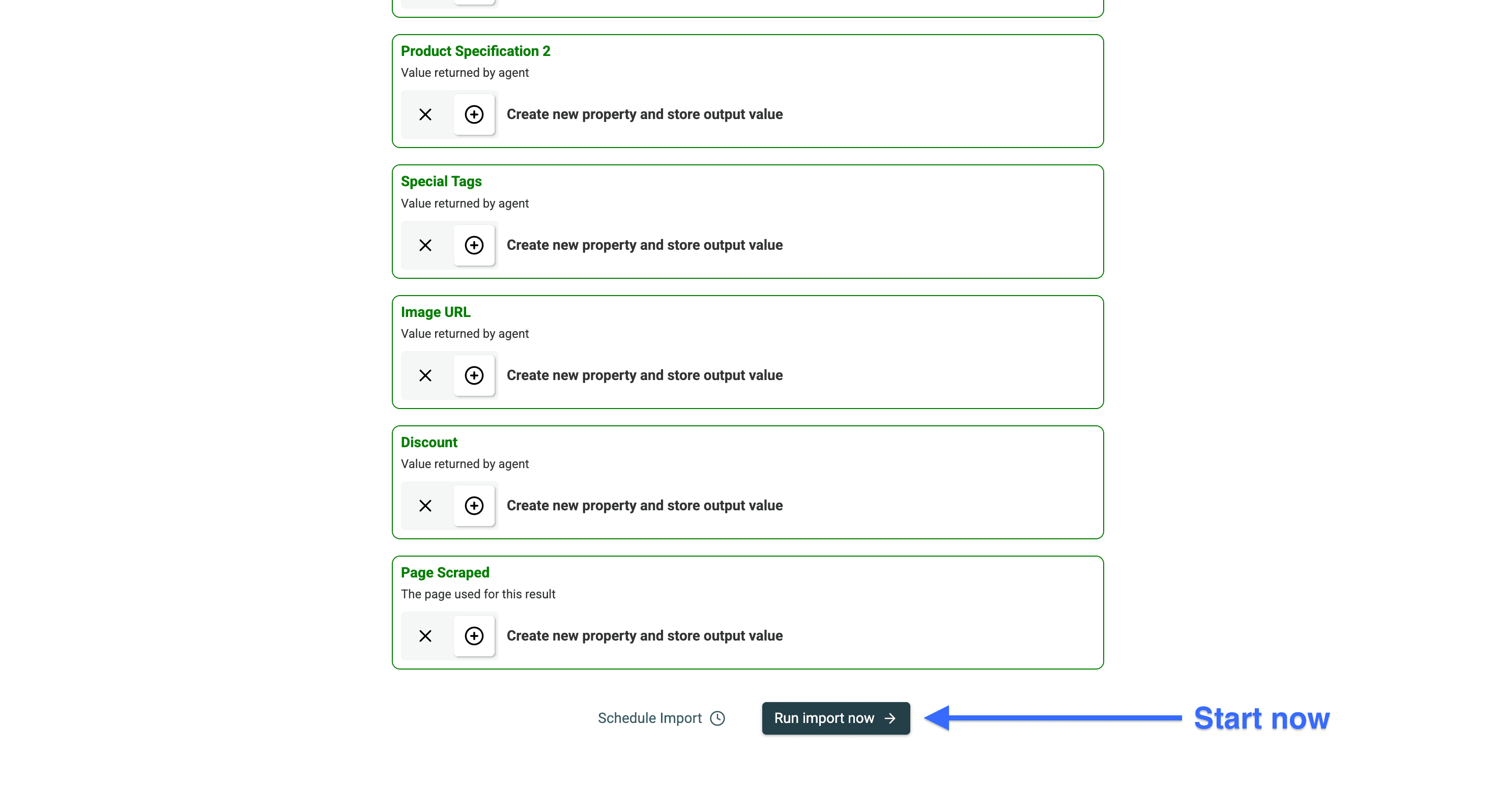

Then click on "Run import now" to start scraping the product information from your list of e-commerce sites

Here are the results you will get from the website scraper AI Agent.
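The prompt asks the agent to return the text "None" when a tag or discount is missing. If you later process the results outside Datablist, you may want to turn those placeholders into real empty values. Here is a minimal Python sketch under that assumption; the function names are hypothetical, and the exact text format the agent returns for discounts (for example "-20%" or "12,5 %") is an assumption as well.

```python
import re
from typing import Optional


def clean_optional(value: str) -> Optional[str]:
    """Turn the "None" placeholder (or an empty cell) into a real None."""
    stripped = value.strip()
    if stripped == "" or stripped.lower() == "none":
        return None
    return stripped


def parse_discount(value: str) -> Optional[float]:
    """Extract the numeric part of a discount such as "-20%" or "12,5 %"."""
    cleaned = clean_optional(value)
    if cleaned is None:
        return None
    match = re.search(r"\d+(?:[.,]\d+)?", cleaned)
    if match is None:
        return None
    # Accept a decimal comma as well as a decimal point.
    return float(match.group().replace(",", "."))
```

For instance, `parse_discount("None")` gives `None`, while `parse_discount("-20%")` gives `20.0`.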

💡 Do This to Avoid Duplicates

Set a unique value, such as the product link or product name, so the same product isn't imported twice when you scrape the shop again. On later runs, only new items will be added. Note that you'll still pay for the products that aren't imported, since we can only prevent the import, not the scraping.

See instructions below ⬇️

First, select your unique identifier. In my case, I'll take the product link, but it could be something else for you.

Then click on the column header and select "Rename - Settings - Delete"

Now check the box that says "Do not allow duplicate values" and click on "Save Property"

Once you've done this, you're all set and will have each product from the store appear only once in your collection, even if you scrape that e-commerce store multiple times.

Now you should also see a key icon in your column header that confirms this.
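Conceptually, a unique property works like the following Python sketch: rows whose unique value already exists are skipped, and everything else is added. This is an illustration of the behavior, not Datablist's actual implementation; the `import_rows` function, the `product_link` key, and the example URLs are hypothetical.

```python
def import_rows(collection: list[dict], new_rows: list[dict],
                unique_key: str = "product_link") -> int:
    """Append only rows whose unique_key value is not in the collection yet.

    Returns the number of rows that were actually added.
    """
    seen = {row[unique_key] for row in collection}
    added = 0
    for row in new_rows:
        if row[unique_key] in seen:
            continue  # duplicate: scraped again, but not imported
        collection.append(row)
        seen.add(row[unique_key])
        added += 1
    return added


# First run already imported one product.
collection = [{"product_link": "https://shop.example.com/p/1", "name": "Tank A"}]

# Second run scrapes the old product again plus one new product.
second_run = [
    {"product_link": "https://shop.example.com/p/1", "name": "Tank A"},
    {"product_link": "https://shop.example.com/p/2", "name": "Tank B"},
]
added = import_rows(collection, second_run)
```

Here both products are scraped on the second run, but only the new one is added to the collection, which matches the note above about still paying for skipped items.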

Conclusion

Scraping e-commerce sites and stores without code is possible: just use an AI agent that does it for you. The only thing you really need to focus on is your prompt. You can also use the prompt we provided, but be sure to insert your own examples in the parentheses so the agent scrapes the product information that is relevant to you.

Can I Scrape E-commerce Sites Without Code?

Yes, Datablist's AI allows you to scrape e-commerce sites using natural language commands. You simply need to write instructions in plain English and the AI agent will handle the technical aspects.

How to Monitor Price Changes on E-commerce Sites?

You can set up Datablist’s AI agent with recurring tasks to automatically monitor and track price changes. The agent will periodically check the sites and record any price updates.
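Once you have results from two runs, spotting price changes comes down to comparing prices for the same product. Here is a minimal Python sketch of that comparison, assuming each run is available as a list of rows with a product link and a numeric price. The function name, field names, and example data are hypothetical, and this is not a description of how Datablist tracks changes internally.

```python
def price_changes(previous: list[dict], current: list[dict]) -> list[tuple]:
    """Return (product_link, old_price, new_price) for every changed price."""
    old_prices = {row["product_link"]: row["price"] for row in previous}
    changes = []
    for row in current:
        link = row["product_link"]
        # Only products present in both runs can have a price change.
        if link in old_prices and old_prices[link] != row["price"]:
            changes.append((link, old_prices[link], row["price"]))
    return changes


last_week = [
    {"product_link": "https://shop.example.com/p/1", "price": 25.0},
    {"product_link": "https://shop.example.com/p/2", "price": 40.0},
]
today = [
    {"product_link": "https://shop.example.com/p/1", "price": 20.0},
    {"product_link": "https://shop.example.com/p/2", "price": 40.0},
    {"product_link": "https://shop.example.com/p/3", "price": 15.0},
]
changed = price_changes(last_week, today)
```

Using the product link as the key is the same choice as the unique identifier used to avoid duplicates.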

How to Scrape Product Information from E-commerce Sites?

Create an AI agent, specify which data points you want to collect (like prices, names, descriptions), and provide the URL of the e-commerce site. The AI will automatically extract and organize the requested information.

How to Scrape Multiple E-commerce Websites at Once?

Scraping several sites in a single run is currently not possible, but you can create multiple collections and scrape one site/store at a time with Datablist. Simply configure the AI agent with your desired parameters for each store, and it will automatically scrape all the products for you.

Is Scraping Websites Legal?

Web scraping itself is not illegal, but some websites explicitly prohibit scraping in their terms of use.